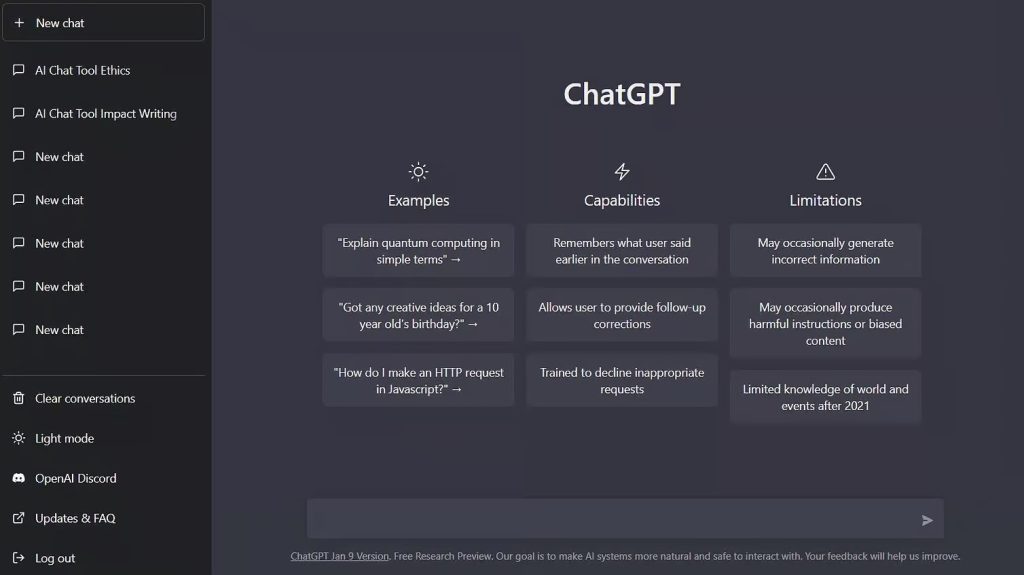

Buried in a panel called Learning in the Age of AI and Technology at SXSW EDU, Paul Kim, the Chief Technology Officer at Stanford University's Graduate School of Education, described how he trains his students to use ChatGPT.

Grading ChatGPT.

He makes his students use ChatGPT to write an essay, document the prompt that they submit to ChatGPT, print the entire response ChatGPT gives, critique the result, and then write an improved version.

There is a lot going on in a multi-stage assignment like this one.

First, there is prompt engineering. The idea of prompt engineering has been around for a while, but with the advent of ChatGPT its usefulness has exploded. Suddenly it is easy to produce paragraphs of text, but hard to get the model to produce exactly the kind of paragraphs you want. The basic idea is that the more of the work you can get ChatGPT (or any generative AI) to do, the sooner you will have a finished product. It is easy to get ChatGPT to write a generic draft, but getting an essay with any emotion or style is hard.

It can help to ask it to write an essay "as if it were" a writer you like. It can help to prime the pump a bit and give the model some context. It can really help to understand how GPT-3 and GPT-4 work under the hood and to phrase prompts with that in mind. Prompt engineering might be the single most important skill on a student's resume in just a couple of years.

The second stage is to critique the response ChatGPT gives. ChatGPT is good at confidently giving misinformation. It is not exactly lying, because ChatGPT does not (for the most part) check what it produces against objective fact. So grading an essay's output on both facts and style puts several other skills to use. A student needs to know enough about English and writing to recognize boring sentence structure or uninspired text. They also need to know enough about the underlying topic to see whether the model got anything wrong. If the goal of the essay is to persuade and not just to inform, the student also needs to know how to use "we" or create urgency, so that the essay is more convincing than the sterile "list of reasons" ChatGPT usually gives.

Finally, the student has to use their own feedback to write an improved essay. Theoretically they could use ChatGPT to do this, or at least part of it, but even that could be part of the assignment. Iterating on earlier prompts to get the AI to produce a better essay is such a useful skill that it would be hard to call it "cheating."

The most fascinating part of an assignment like this is how multidisciplinary it is. It fits easily into any Social Studies, History, English, Literature, or Government course at both the K-12 and Higher Ed levels. Students would have to use skills learned in other classes, and work on skills they didn’t have before, to create something that is high quality. And students can rely on whichever skill in the chain they are the strongest at, continuing to build mastery in areas they already excel in, rather than being forced to spend hours doing things that they don’t find compelling.

Truth be told, it could even be used in corporate professional development sessions as a way to teach prompt engineering to many employees at once. And if you leverage peer review and peer grading, the whole project would not necessarily take many resources.

This might be one of the single most useful, and relatively cheat-proof, ways to use ChatGPT in any class that involves writing.
